Project Case Study

Telly: BISINDO Sign Language Learning

iOS · SwiftUI · Create ML · SwiftData

Overview

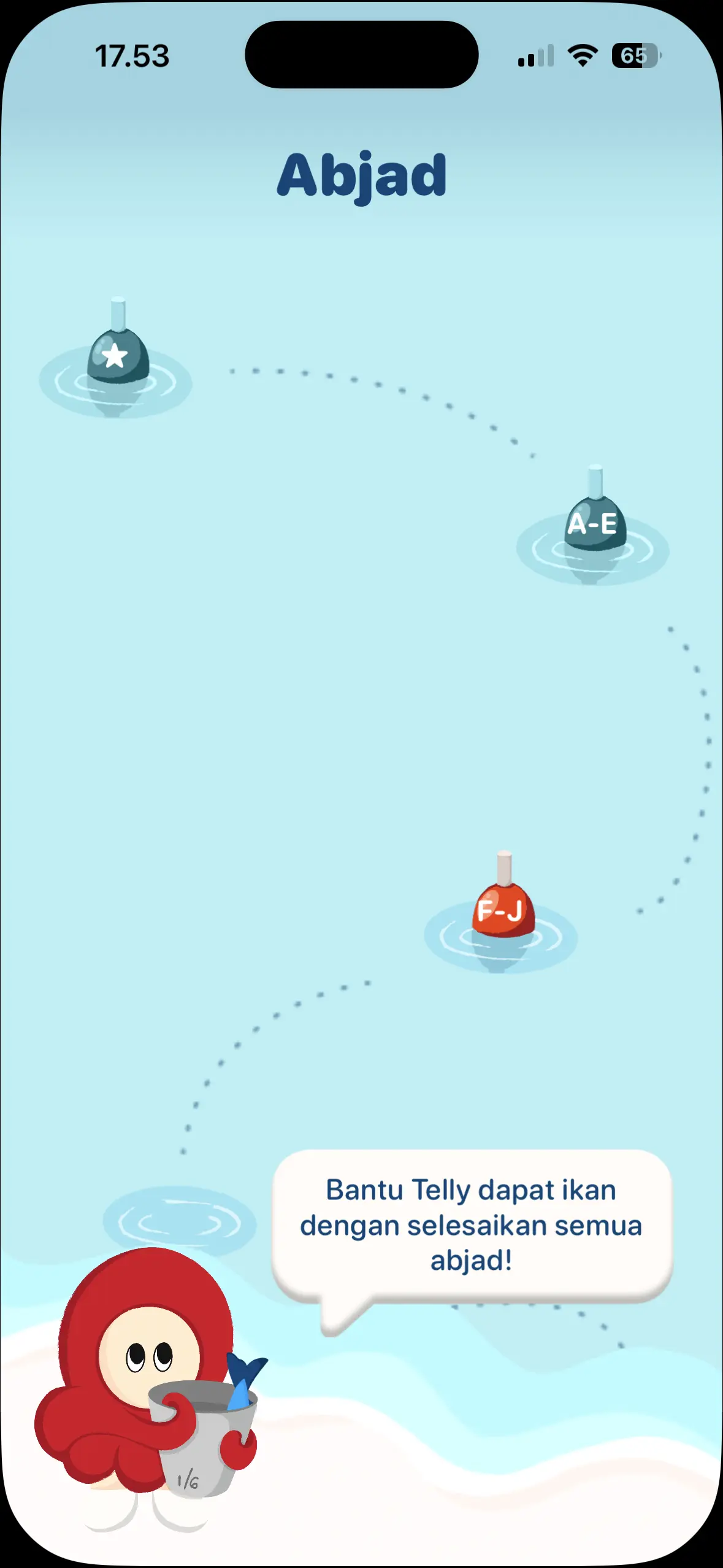

A native iOS app teaching Indonesian Sign Language (BISINDO) through real-time gesture recognition.

What it does

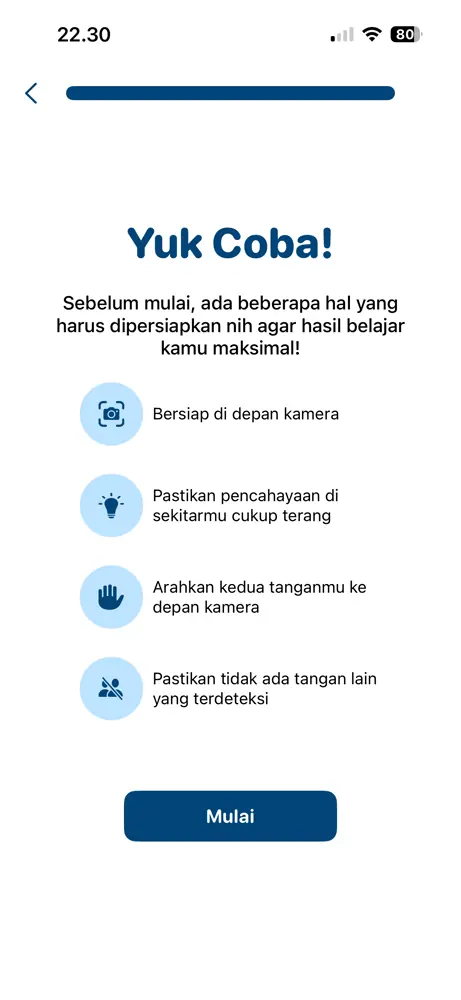

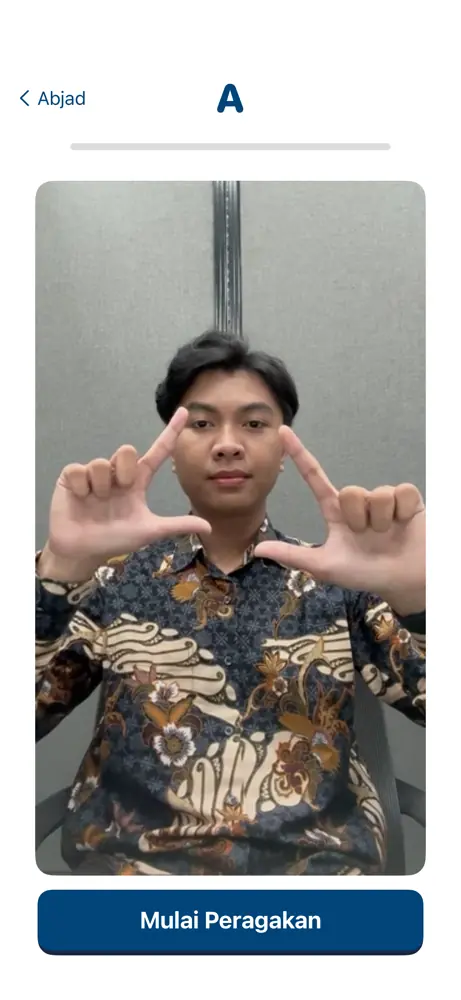

Users learn BISINDO signs by watching demonstrations, then practicing with their device's camera. The app uses on-device machine learning to verify their hand shapes and provide instant feedback.

How I built it

- On-Device Vision: Trained Create ML models to recognize 50+ BISINDO signs, optimized for low-latency execution directly on the device.

- Reactive SwiftUI UI: Built an interactive course system with real-time camera overlays providing instant gesture feedback.

- Local Data Persistence: Used SwiftData to manage user progress, lesson states, and achievement history without server dependency.
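
To illustrate the instant-feedback idea from the list above, here is a minimal sketch of one way per-frame classifier output could be smoothed before it drives a camera overlay. It assumes the model emits a label and a confidence for each frame. The names `FramePrediction` and `FeedbackSmoother`, the window size, and the thresholds are all hypothetical and are not taken from Telly's source.

```swift
// Hypothetical per-frame classifier output; the app's real types are not shown in this case study.
struct FramePrediction {
    let label: String
    let confidence: Double
}

/// Keeps the most recent predictions and reports a sign only when enough
/// confident frames agree, so the overlay does not flicker between labels.
struct FeedbackSmoother {
    let windowSize: Int
    let minConfidence: Double
    let minVotes: Int
    private var recent: [FramePrediction] = []

    // Default values are illustrative assumptions, not tuned numbers.
    init(windowSize: Int = 5, minConfidence: Double = 0.8, minVotes: Int = 3) {
        self.windowSize = windowSize
        self.minConfidence = minConfidence
        self.minVotes = minVotes
    }

    /// Adds one frame and returns the agreed sign, or nil if there is no stable answer yet.
    mutating func add(_ prediction: FramePrediction) -> String? {
        recent.append(prediction)
        if recent.count > windowSize {
            recent.removeFirst()
        }
        // Count votes only from frames the classifier was confident about.
        var votes: [String: Int] = [:]
        for p in recent where p.confidence >= minConfidence {
            votes[p.label, default: 0] += 1
        }
        guard let best = votes.max(by: { $0.value < $1.value }),
              best.value >= minVotes else {
            return nil
        }
        return best.key
    }
}

// Usage: feed predictions as frames arrive and update the overlay when a label is returned.
var smoother = FeedbackSmoother()
_ = smoother.add(FramePrediction(label: "A", confidence: 0.90))
_ = smoother.add(FramePrediction(label: "A", confidence: 0.95))
let stable = smoother.add(FramePrediction(label: "A", confidence: 0.85))
// `stable` is "A" here because three confident frames in the window agree.
```

With the default of 3 votes out of a 5-frame window, at most one label can reach the threshold, so the result is unambiguous. A smaller `minVotes` would need an explicit tie-breaking rule.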

Challenges

The hardest part was getting enough training data, because BISINDO resources are scarce compared to those for American Sign Language (ASL). I had to film and annotate my own reference videos, then augment the dataset with rotations and lighting variations to make the model robust to different environments.
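
The rotation and lighting augmentation described above can be sketched on a toy grayscale image. This is only an illustration of the idea: the case study does not say which tool performed the augmentation, and the `GrayImage` representation, the function names, and the nearest-neighbor sampling are assumptions made for this example.

```swift
import Foundation

/// Toy grayscale image: rows of pixel intensities in 0...1 (hypothetical representation).
typealias GrayImage = [[Double]]

/// Lighting variation: scale every pixel by `factor` and clamp the result to 0...1.
func adjustBrightness(_ image: GrayImage, factor: Double) -> GrayImage {
    return image.map { row in row.map { min(max($0 * factor, 0), 1) } }
}

/// Rotation about the image center by `degrees`, using nearest-neighbor sampling.
/// Output pixels whose source falls outside the image are left at 0.
/// Assumes every row has the same length.
func rotate(_ image: GrayImage, degrees: Double) -> GrayImage {
    let h = image.count
    guard h > 0, let w = image.first?.count, w > 0 else { return image }
    let theta = degrees * Double.pi / 180
    let c = cos(theta)
    let s = sin(theta)
    let cx = Double(w - 1) / 2
    let cy = Double(h - 1) / 2
    var out = GrayImage(repeating: [Double](repeating: 0, count: w), count: h)
    for y in 0..<h {
        for x in 0..<w {
            // Inverse-map each output pixel back to its source location.
            let dx = Double(x) - cx
            let dy = Double(y) - cy
            let sx = Int((c * dx + s * dy + cx).rounded())
            let sy = Int((-s * dx + c * dy + cy).rounded())
            if sx >= 0 && sx < w && sy >= 0 && sy < h {
                out[y][x] = image[sy][sx]
            }
        }
    }
    return out
}

/// Produces one augmented copy per (angle, brightness factor) pair.
func augment(_ image: GrayImage, angles: [Double], brightness: [Double]) -> [GrayImage] {
    var result: [GrayImage] = []
    for angle in angles {
        let rotated = rotate(image, degrees: angle)
        for factor in brightness {
            result.append(adjustBrightness(rotated, factor: factor))
        }
    }
    return result
}

// Usage: three small rotations times two lighting levels yields six variants per frame.
let frame: GrayImage = [[1, 0], [0, 0]]
let variants = augment(frame, angles: [-10, 0, 10], brightness: [0.7, 1.3])
```

Each source frame keeps its original label, so a handful of angles and brightness factors multiplies the labeled dataset without any extra filming.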

Balancing model accuracy with file size was tricky since the entire model ships with the app.